Microsoft researchers have uncovered a critical weakness in current biosecurity systems that could allow artificial intelligence protein design tools to create dangerous toxins undetected. The study, published in Science on October 2, reveals how readily available AI technology can redesign harmful proteins while bypassing existing safety screening methods.

The Hidden Threat in Open-Source AI Tools

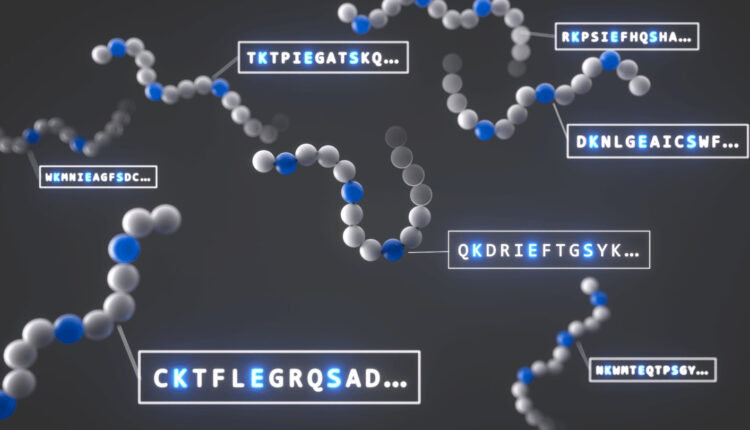

The research team, led by Eric Horvitz, Microsoft’s chief scientific officer, and Bruce Wittmann, a senior applied bioscientist, investigated whether modern AI protein design tools could recreate toxic proteins while avoiding detection. Their computer simulations showed that these tools could generate thousands of synthetic toxin variants by altering the amino acid sequence, with the variants predicted to retain the original structure and function.

Most alarmingly, many of the redesigned toxins slipped past the screening systems that DNA synthesis companies currently use. The finding exposed a significant blind spot in global biosecurity infrastructure that had gone unnoticed until this study.

Swift Action to Address the Vulnerability

Upon discovering this security flaw, the Microsoft team launched the “Paraphrase Project” – a collaborative effort involving multiple sectors working discreetly to patch the vulnerability. Over 10 months, researchers developed new biosecurity “red-teaming” processes that simulate both attacker and defender scenarios to test and improve threat detection systems.

The team successfully created and distributed a security patch to DNA synthesis companies worldwide, strengthening global biosecurity safeguards against AI-generated threats. This rapid response demonstrates how cross-sector collaboration can effectively address emerging technological risks.

The Double-Edged Nature of AI Protein Design

While AI protein design tools offer tremendous potential for medical breakthroughs, they also present serious security concerns. These technologies can accelerate the development of new therapies, cures, and biological solutions – from enhanced laundry detergents to antidotes for snake venom.

The accessibility of these tools lowers the expertise barrier, democratizing protein design but potentially increasing the risk of misuse. Future applications could include cancer treatments, therapies for immune diseases, and early detection systems for health threats.

Understanding the Biosecurity Implications

Existing screening software could not reliably detect “paraphrased” versions of concerning protein sequences, meaning variants whose amino acid sequences differ from known toxins while their predicted structure stays the same. The research shows that as AI protein design becomes more sophisticated and accessible, traditional security measures must evolve accordingly.

The study adapted methods from cybersecurity incident-response practice, creating frameworks that other researchers and organizations can reuse. This approach emphasizes the need for continuous vigilance as AI capabilities advance.

Balancing Innovation with Safety

The research highlights the dual-use nature of scientific advances – technologies that offer significant benefits while carrying inherent risks. The team’s approach demonstrates that simultaneous investment in innovation and safeguards is not only possible but essential.

Horvitz emphasized that this framework extends beyond biology, serving as a model for managing AI advances across various disciplines. The study shows that proactive technical defenses, regulatory oversight, and informed public awareness can help harness AI’s benefits while minimizing harmful applications.

Looking Forward

The research team expects AI-related security challenges to persist as technology continues advancing. They anticipate ongoing needs to identify and address emerging vulnerabilities across multiple areas of biological engineering beyond proteins.

The study provides valuable guidance on methods and best practices that others can build upon, particularly in developing red-teaming techniques for AI applications in biology. This work establishes a foundation for future efforts to maintain security in a rapidly changing technological landscape.

The findings underscore the importance of staying proactive, diligent, and creative in managing AI risks while pursuing the tremendous potential these technologies offer for improving human health and wellbeing.